
Project: Visualizing counterfactual explanations for exploration and interpretation

Description

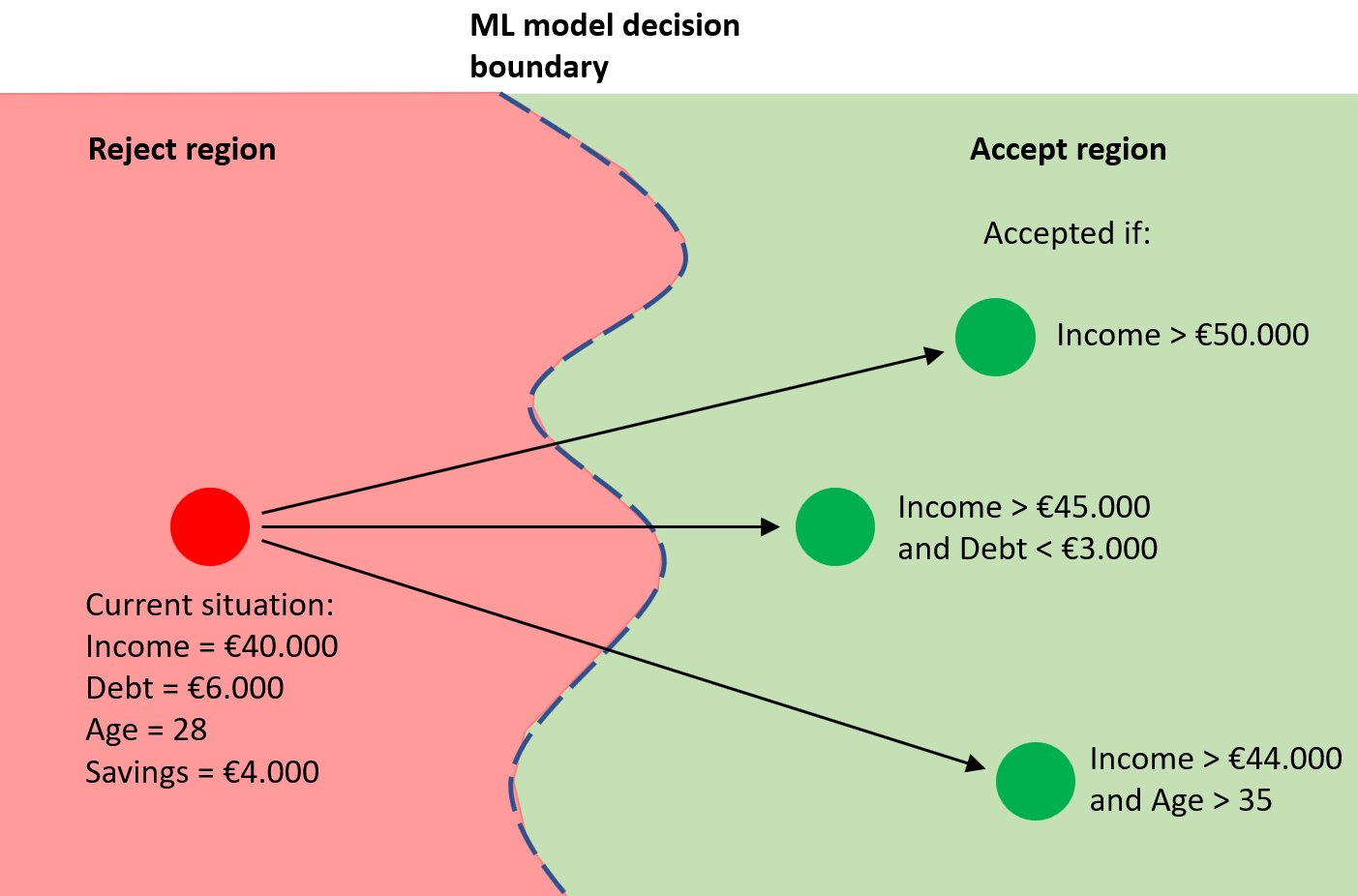

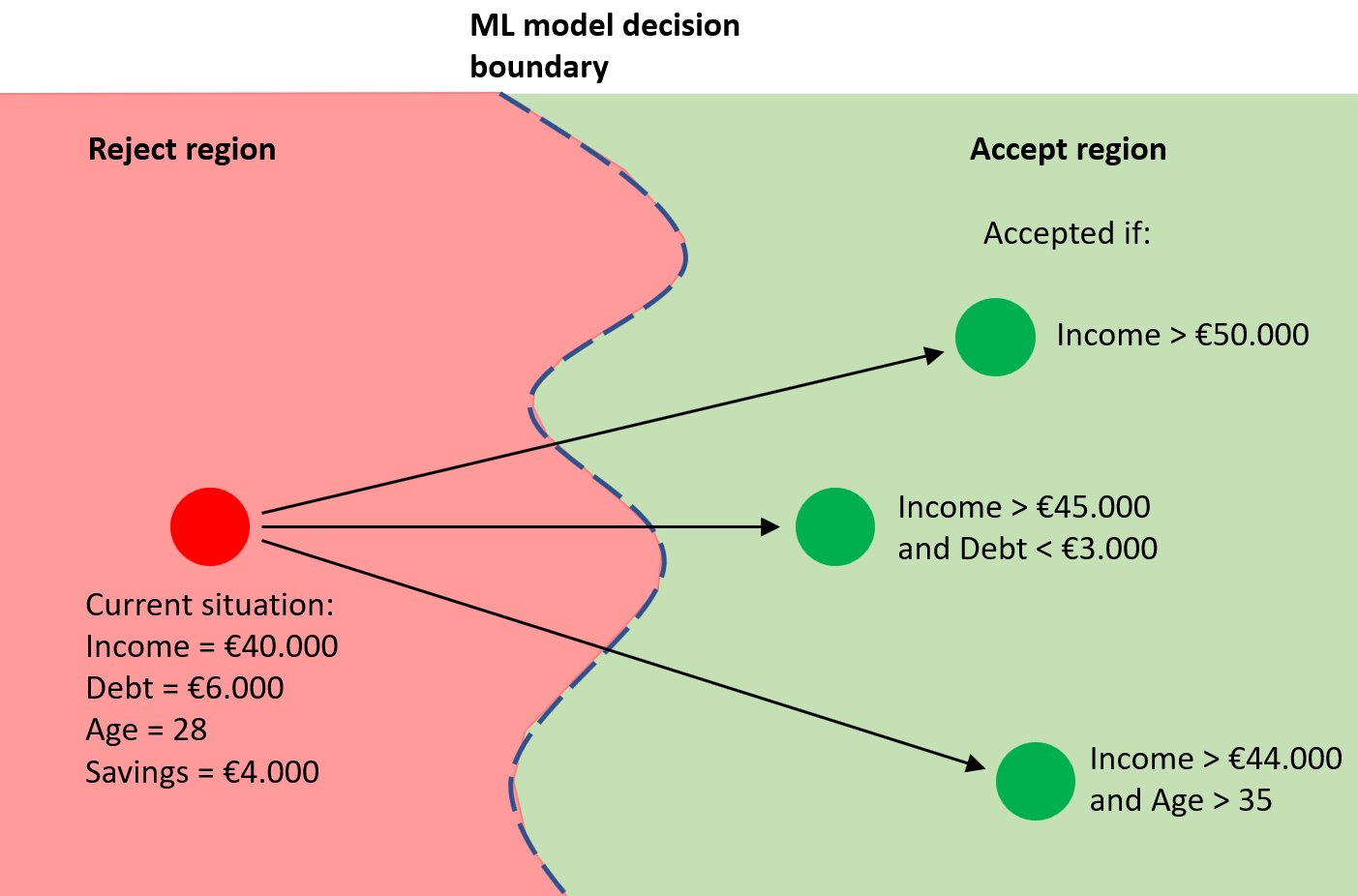

When a machine learning classifier has made a prediction and the user disagrees with it, they want to know what would need to change to obtain a different (better) outcome. Computing such changes is the subject of counterfactual explanations and algorithmic recourse, a branch of machine learning. Counterfactuals can be computed in many ways, and it is not clear which way is best. The idea is that a user with domain knowledge can determine which counterfactual is the 'best' one. In this project, the task is to devise a visualization of counterfactuals (generated by counterfactual algorithms) and user interactions that bring in domain knowledge to determine the best counterfactual, i.e., what needs to be changed to get a desired outcome from the ML model.
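To make the idea concrete, here is a minimal sketch, not the project's method: a brute-force counterfactual search over a hypothetical linear loan model, where the user's domain knowledge enters as a set of immutable features and per-feature change costs used to rank the candidates. The feature names, weights, threshold, step grid and costs (`WEIGHTS`, `THRESHOLD`, `steps`, `immutable`, `cost`) are all illustrative assumptions. Published counterfactual algorithms typically use optimization or sampling rather than exhaustive enumeration.

```python
from itertools import product

# Hypothetical toy classifier (illustrative assumption, not from the project):
# approve (1) when the weighted score reaches THRESHOLD, otherwise reject (0).
# Integer weights keep the arithmetic exact.
WEIGHTS = {"income": 2, "debt": -3, "age": 1}
THRESHOLD = 9


def predict(x):
    """Return the toy model's class for feature dict x."""
    score = sum(w * x[f] for f, w in WEIGHTS.items())
    return int(score >= THRESHOLD)


def counterfactuals(x, steps, immutable, cost, target=1):
    """Brute-force search for feature changes that flip the prediction.

    steps:     candidate deltas tried for every mutable feature
    immutable: features the user says cannot change (domain knowledge)
    cost:      per-unit cost of changing each feature (domain knowledge)
    Returns (total_cost, changes) pairs, cheapest first.
    """
    mutable = [f for f in x if f not in immutable]
    results = []
    # Try every combination of deltas on the mutable features.
    for deltas in product(steps, repeat=len(mutable)):
        changes = {f: d for f, d in zip(mutable, deltas) if d != 0}
        if not changes:
            continue  # the unchanged instance is not a counterfactual
        candidate = {**x, **{f: x[f] + d for f, d in changes.items()}}
        if predict(candidate) == target:
            total = sum(cost[f] * abs(d) for f, d in changes.items())
            results.append((total, changes))
    # Rank by the user's cost model; Python's sort is stable for ties.
    return sorted(results, key=lambda r: r[0])


if __name__ == "__main__":
    applicant = {"income": 4, "debt": 2, "age": 3}
    unit_cost = {"income": 3, "debt": 2, "age": 1}
    ranked = counterfactuals(applicant, steps=(-1, 0, 1, 2),
                             immutable={"age"}, cost=unit_cost)
    for total, changes in ranked:
        print(total, changes)
```

With "age" marked immutable, the cheapest suggestion for this toy applicant is to raise income by 1 and lower debt by 1 (cost 5). Passing `immutable=set()` instead makes the cheapest suggestion "lower debt by 1 and become a year older" (cost 3), an unactionable change of exactly the kind that domain knowledge, brought in through interaction, should rule out.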

Details

- Student: Bart Hofmans
- Supervisor: Stef van den Elzen
- Link: Thesis