Project: Descriptive Analytics using Prediction Models

Description

The growing adoption of machine learning prediction techniques across societal sectors, from health to industry, has created a need to inspect prediction models for accountability. This line of work is usually referred to as explainable AI (xAI), and Visual Analytics (VA) tools have shown promising results by helping to involve users in this process.

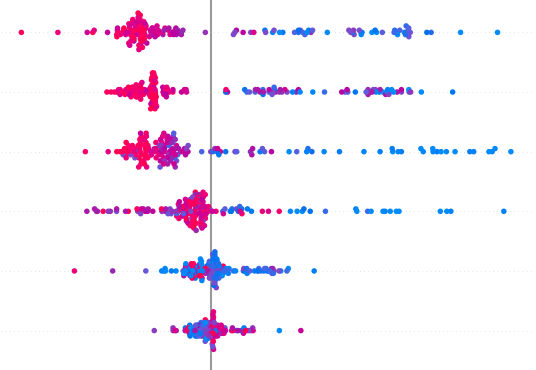

In the current literature, such VA xAI techniques have also been used as a proxy for understanding the data, thus acting as descriptive tools rather than solely predictive mechanisms. Despite this common use, detailed information is lost when explaining data, because classifiers focus on separating classes rather than on the internal structure (or patterns) of the data. In this project, we will investigate this phenomenon and propose a solution that allows classifiers to be used adequately for descriptive analytics by also capturing the patterns internal to each class.

Details

- Student: Joey Lensen

- Supervisor: Fernando Paulovich