Project: Visual Analysis of Classification Ensemble Models Results

Description

Ensembles combine several standard classifiers, exploiting their different characteristics by merging the individual predictions into a single classification outcome. As the need to audit results produced by automated techniques has grown, so has the demand to explain the results of ensemble models. Unlike with a single classifier, however, the challenge is to support auditing and interpretability when multiple models jointly produce one result.
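To make the idea of combining individual predictions concrete, here is a minimal sketch of one common combination rule, majority voting. The three threshold classifiers, their names, and the sample values are hypothetical and chosen only for illustration; the project does not prescribe a particular ensemble method.

```python
from collections import Counter

# Hypothetical base classifiers: each maps a 2-feature sample to a label.
def by_length(x):
    return "long" if x[0] > 5.0 else "short"

def by_width(x):
    return "long" if x[1] > 2.0 else "short"

def by_sum(x):
    return "long" if x[0] + x[1] > 6.5 else "short"

def majority_vote(classifiers, x):
    """Return the ensemble label and the individual votes for one sample."""
    votes = [clf(x) for clf in classifiers]
    # most_common(1) yields the label with the highest vote count.
    label, _ = Counter(votes).most_common(1)[0]
    return label, votes

ensemble = [by_length, by_width, by_sum]
label, votes = majority_vote(ensemble, (5.5, 1.5))
print(label, votes)
```

Keeping the individual votes next to the final label is what makes the single outcome auditable: the dissenting classifier remains visible.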

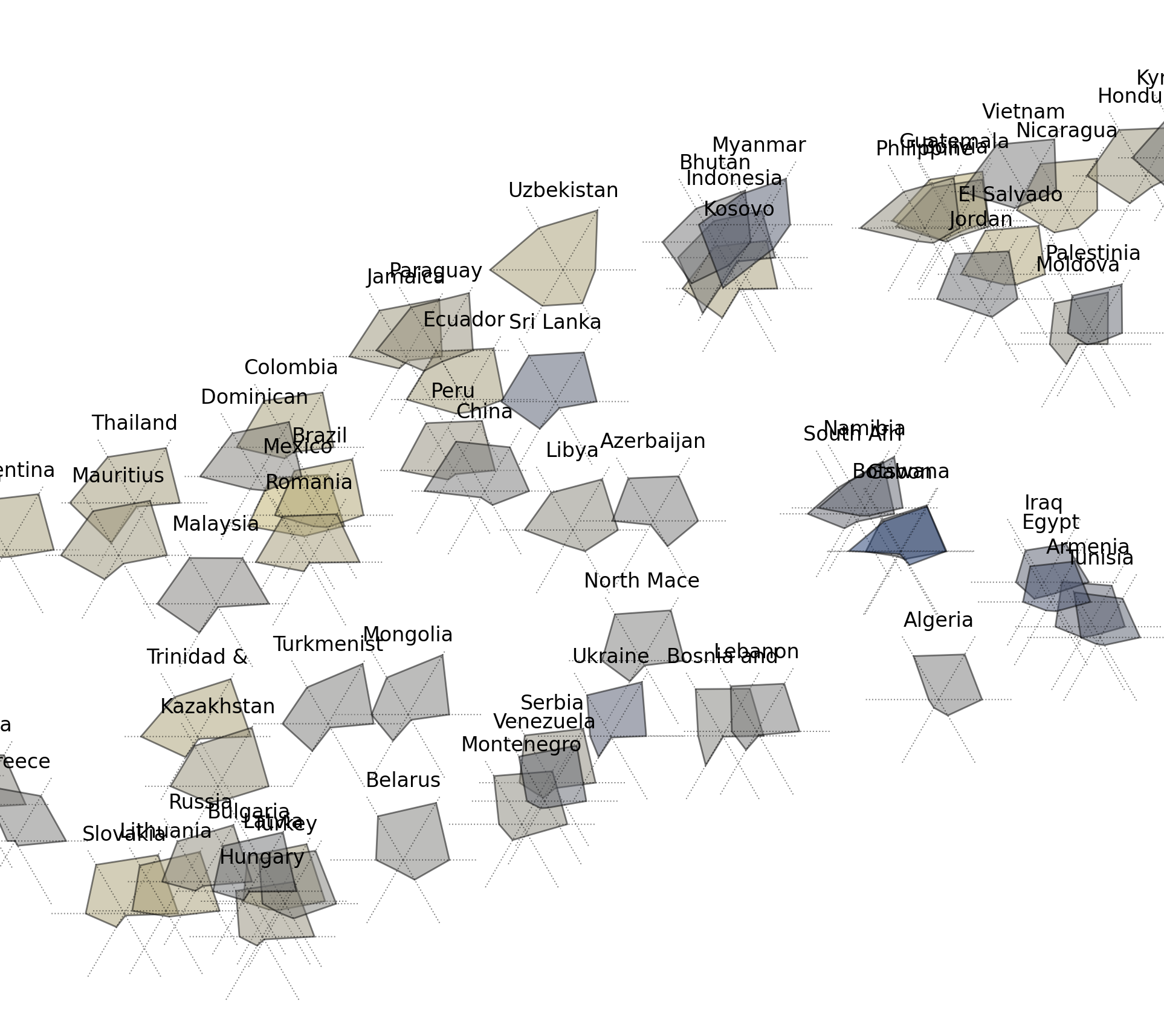

In this project, we will investigate how dimensionality reduction, well-designed glyphs, and established explainable AI (XAI) techniques for classifiers can be used together within a Visual Analytics (VA) framework to support the interpretability of ensembles. The goal is to provide a multi-level process in which the reasoning of the ensemble as a whole can be investigated (global interpretability) and the predictions of the individual classifiers can be audited (local interpretability).
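The two levels can be illustrated with a small sketch that works only on the votes of the ensemble members. It is an assumption-laden toy, not the project's method: the `audit` helper, the agreement score, and the vote data are all hypothetical, and the sketch leaves out dimensionality reduction, glyphs, and XAI techniques entirely.

```python
from collections import Counter

def audit(votes_per_sample):
    """Summarize member votes for each sample (local view).

    votes_per_sample: list of lists, one inner list of member votes per sample.
    """
    report = []
    for votes in votes_per_sample:
        counts = Counter(votes)
        label, top = counts.most_common(1)[0]
        report.append({
            "label": label,                  # majority label for this sample
            "agreement": top / len(votes),   # share of members backing it
            "votes": dict(counts),           # full vote breakdown
        })
    return report

# Hypothetical votes of three classifiers on three samples.
votes = [["a", "a", "a"], ["a", "b", "a"], ["b", "b", "a"]]
report = audit(votes)

# Global view: average agreement across all samples.
global_agreement = sum(r["agreement"] for r in report) / len(report)
# Local view: samples where at least one member disagrees.
contested = [i for i, r in enumerate(report) if r["agreement"] < 1.0]
print(global_agreement, contested)
```

A summary such as `global_agreement` hints at how the ensemble behaves overall, while the `contested` indices point to the individual predictions worth inspecting.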

Details

- Student: Paolo Franken

- Supervisor: Fernando Paulovich