
Project: Vision-Based Robotic Perception and Physics-Informed Scene Representation

Description

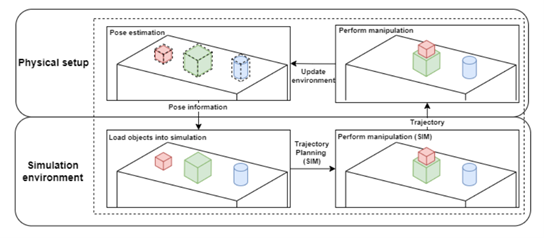

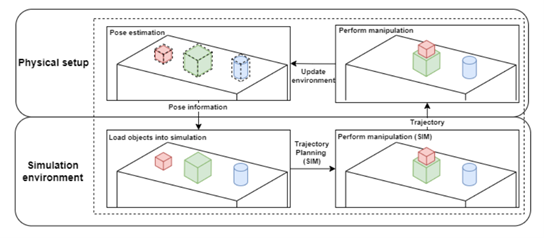

This project aims to design a physics-based simulation environment that dynamically represents a robot performing vision-guided manipulation of rigid objects. The object poses are to be estimated using an RGB or RGB-D camera.

The process should be fully autonomous. To enable safe manipulation, the estimated object poses will be represented in the simulated environment.

This representation should be updated dynamically so that the robot can carry out its tasks without risking damage to itself.

Within the simulation, the pick and place poses of the objects will be predicted.

Details

- Student: Stefan van der Palen
- Supervisor: Andrei Jalba
- Secondary supervisor: Alessandro Saccon (TU/e Mechanical Engineering)
- Link: Thesis