Project: Developing and Optimizing Deep Learning Detection Algorithms for Medical Applications with Visual Analytics

Description

The main subject investigated in this project is the explainability of black-box deep learning models using visual analytics techniques. The main goal is to build a framework in which model architects and developers can explore the inner workings of their networks throughout the successive design, training, tuning, and retraining phases of model construction. This has been an intensively researched area in industry ever since machine learning models became increasingly powerful and complex. There are two main reasons for this trend: model developers need to treat deep learning models as close to white-box models as possible, and, once a model is developed, it needs to be explainable to, and validated for, the domain experts who will use it.

The project is applied in the medical domain, more specifically to cancer detection in scans. It is a partnership between the Video Coding and Architecture research group of the Department of Electrical Engineering and the Visualization research group of the Department of Mathematics and Computer Science. Although the framework could potentially be used by researchers on a variety of networks, an initial network will be chosen for the analysis so that the framework can be tailored to the particularities of the types of networks used by the Video Coding and Architecture researchers. We aim to support networks currently used by our collaborators for detecting various cancers, including esophageal, lung, and colon cancer, or, more generally, a network that uses wavelet transforms to perform noise reduction on CT scans, which considerably improves the results of the detection networks.

The goal of the preparation phase is to explore three main challenges: defining which type of network will be used, investigating which visual analytics methods can extract as much insight into the network as possible, and determining how the tool can be implemented.

References

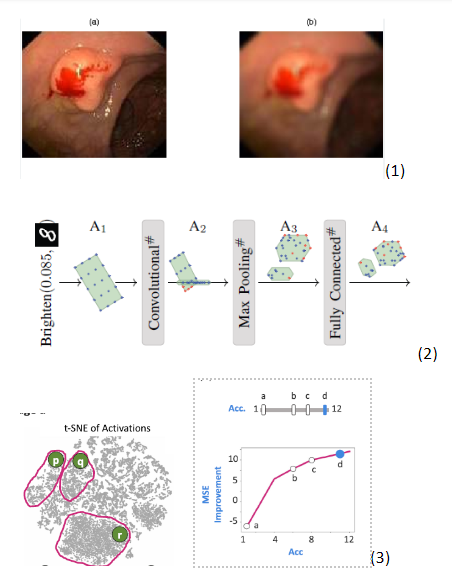

(1) Liu, Haiying, W.-S. Lu and Max Q.‐H. Meng. “De-blurring wireless capsule endoscopy images by total variation minimization.” Proceedings of 2011 IEEE Pacific Rim Conference on Communications, Computers and Signal Processing (2011): 102-106.

(2) Gehr, Timon, Matthew Mirman, Dana Drachsler-Cohen, Petar Tsankov, Swarat Chaudhuri, and Martin Vechev. "Ai2: Safety and robustness certification of neural networks with abstract interpretation." In 2018 IEEE symposium on security and privacy (SP), pp. 3-18. IEEE, 2018.

(3) Prasad, Vidya, Ruud JG van Sloun, Stef van den Elzen, Anna Vilanova, and Nicola Pezzotti. "The transform-and-perform framework: Explainable deep learning beyond classification." IEEE Transactions on Visualization & Computer Graphics 30, no. 02 (2024): 1502-1515.

Details

- Student: Andreea Popa
- Supervisor: Anna Vilanova
- Secondary supervisor: Vidya Prasad