Project: Robust Online Environment Reconstruction with Multiple Cameras for Cobot Collision Avoidance

Description

Collaborative robots (cobots) are used in, for instance, the assembly and packaging industries to help workers complete repetitive tasks that would otherwise be difficult or impossible. To improve cobots further, they should be able to complete their tasks without colliding with dynamic objects. To avoid collisions, a cobot needs to be aware of its environment.

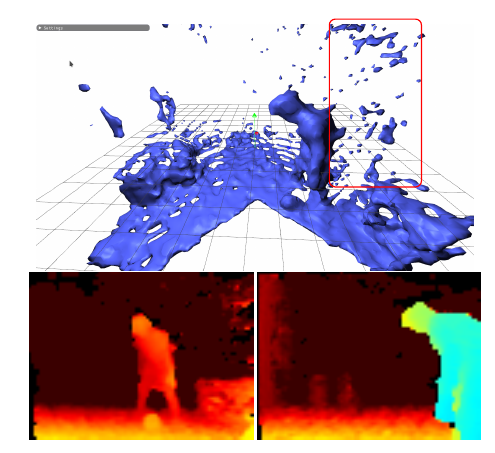

To improve the description of the environment, multiple cameras are introduced. A framework and methodology are presented for aligning the point clouds of these cameras with the help of fiducial markers and precise manual refinement. Depth noise resulting from the stereophotogrammetry algorithm used by the depth cameras, together with “phantom points” (points with an incorrect depth value), causes problems in the reconstructed environment. To capture high-quality point clouds, the depth noise was reduced by calibrating the cameras.
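The marker-based alignment step rests on one piece of geometry: if two cameras observe the same fiducial marker, the pose of one camera relative to the other follows from composing the two estimated marker poses. The sketch below is a minimal illustration under stated assumptions, not the project's implementation. Each marker pose is assumed to be given as a rotation matrix and translation mapping marker coordinates into camera coordinates (as a marker detector would typically estimate it), and all function names and example values are hypothetical. It computes only the initial marker-based estimate; the manual refinement described above is not modelled.

```python
import math

# Assumption: a rigid transform is a pair (R, t), with R a 3x3 rotation
# matrix given as a list of rows and t a 3-vector. It maps p to R @ p + t.

def mat_vec(R, v):
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(R):
    return [[R[j][i] for j in range(3)] for i in range(3)]

def compose(T_a, T_b):
    """Return T_a o T_b: apply T_b first, then T_a."""
    R_a, t_a = T_a
    R_b, t_b = T_b
    R = mat_mul(R_a, R_b)
    t = [x + y for x, y in zip(mat_vec(R_a, t_b), t_a)]
    return R, t

def invert(T):
    """Inverse of a rigid transform: (R, t)^-1 = (R^T, -R^T t)."""
    R, t = T
    R_inv = transpose(R)
    return R_inv, [-x for x in mat_vec(R_inv, t)]

def apply(T, p):
    """Map a single 3D point p through the transform T."""
    R, t = T
    return [x + y for x, y in zip(mat_vec(R, p), t)]

def cam2_to_cam1(marker_in_cam1, marker_in_cam2):
    """Hypothetical helper: transform from camera-2 to camera-1 coordinates.

    Since p_cam1 = T1 p_marker and p_cam2 = T2 p_marker for the same
    marker, it follows that p_cam1 = T1 T2^-1 p_cam2.
    """
    return compose(marker_in_cam1, invert(marker_in_cam2))

def rot_z(deg):
    """Rotation about the z-axis by deg degrees."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

# Example with made-up poses of one shared marker.
T1 = (rot_z(0), [0.0, 0.0, 1.0])   # marker pose seen by camera 1
T2 = (rot_z(90), [0.5, 0.0, 1.2])  # same marker seen by camera 2
T21 = cam2_to_cam1(T1, T2)

p_marker = [0.1, 0.2, 0.0]         # a point on the marker
p_cam2 = apply(T2, p_marker)       # the point as camera 2 sees it
p_cam1 = apply(T21, p_cam2)        # mapped into camera 1's frame
print(p_cam1)                      # approximately [0.1, 0.2, 1.0]
```

Applying `T21` to every point of camera 2's cloud would bring it into camera 1's frame, after which the two clouds can be merged; in practice marker pose estimates are noisy, which is one reason a refinement step follows.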

Details

- Student: Marc Meijer

- Supervisor: Andrei Jalba

- Secondary supervisor: A. Saccon

- Link: Thesis