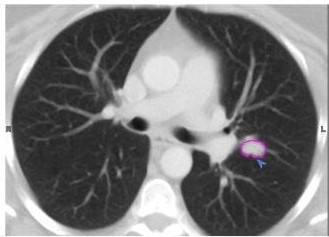

Project: Validation of uncertainty in DL models in the context of lung nodules

Description

Deep Learning models currently achieve state-of-the-art performance on several medical image analysis benchmarks. However, modern Deep Learning architectures have been shown to produce overconfident predictions, even when the prediction is wrong. The adoption of Deep Learning in clinical practice would be greatly facilitated by extending these models so that they provide more information about the uncertainty of their predictions.

This problem has recently attracted attention in the research literature; however, little work has been done to validate such models in the medical image analysis domain. The goal of this project is to validate state-of-the-art methods that provide information about the uncertainty of a Deep Learning model, in the context of lung nodules in the LIDC-IDRI dataset. This dataset contains annotations from multiple radiologists on the location, segmentation, and categorization of lung nodules. The project will focus on the following types of uncertainty:

- Uncertainty related to the disagreement between multiple annotators. Can a Deep Learning model be used to predict this type of uncertainty?

- Uncertainty related to “borderline” cases. The dataset lets radiologists assign a numeric score from 1 to 5 to characterize how “malignant” or “spiculated” a nodule is.

- Exploration of other modes of model uncertainty that can be derived from the available data (for example, scanner manufacturer or scan dose parameters).
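As a rough illustration of the first two uncertainty types, the sketch below turns the 1-5 scores that several radiologists give one nodule into simple disagreement and "borderline" indicators. It is only a minimal sketch under stated assumptions: the function names, the use of standard deviation and normalized entropy as disagreement measures, the midpoint of 3, the margin of 0.5, and the example ratings are all hypothetical and not taken from the project or from the LIDC-IDRI tooling.

```python
from collections import Counter
from math import log
from statistics import mean, pstdev


def disagreement(scores, n_levels=5):
    """Summarize how much annotators disagree on one nodule.

    `scores` holds one rating per annotator on a 1..n_levels scale.
    The choice of measures is an assumption made for illustration.
    """
    total = len(scores)
    probs = [count / total for count in Counter(scores).values()]
    # Shannon entropy of the empirical rating distribution,
    # divided by log(n_levels) so that it lies in [0, 1].
    entropy = sum(-p * log(p) for p in probs) / log(n_levels)
    return {"mean": mean(scores), "std": pstdev(scores), "entropy": entropy}


def is_borderline(scores, midpoint=3.0, margin=0.5):
    """Flag a nodule whose mean rating sits near the middle of the scale.

    `midpoint` and `margin` are hypothetical thresholds.
    """
    return abs(mean(scores) - midpoint) <= margin


# Made-up ratings, not taken from the dataset.
unanimous = disagreement([5, 5, 5, 5])
split = disagreement([1, 2, 4, 5])
print(unanimous)
print(split)
print(is_borderline([1, 2, 4, 5]), is_borderline([3, 3, 3, 3]))
```

Both `[1, 2, 4, 5]` and `[3, 3, 3, 3]` have a mean rating of 3, yet only the first reflects annotator disagreement. This is one reason to treat the two uncertainty types separately; values such as `std` or `entropy` could then serve as regression targets for a model that predicts disagreement.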

Picture source: Kinahan, Paul & Thammasorn, Phawis & Chaovalitwongse, W. & Wu, Wei & Pipavath, Sudhakar & Pierce, Larry & Lampe, Paul & Houghton, A. & Haynor, David. (2018). Deep-learning derived features for lung nodule classification with limited datasets. 50. 10.1117/12.2293236.

Details

- Student

- Kirsten Maas

- Supervisor

- Anna Vilanova

- Secondary supervisor

- Nicola Pezzotti and Dimitrios Mavroeidis

- External location

- Philips

- Link

- Thesis